Sunday, June 17, 2012

Kyle Wiens (of iFixit) doesn't like the new MacBook Pro:

The Retina MacBook is the least repairable laptop we’ve ever taken apart: unlike the previous model, the display is fused to the glass—meaning replacing the LCD requires buying an expensive display assembly. The RAM is now soldered to the logic board—making future memory upgrades impossible. And the battery is glued to the case—requiring customers to mail their laptop to Apple every so often for a $200 replacement. ... The design pattern has serious consequences not only for consumers and the environment, but also for the tech industry as a whole.

And he blames us:

We have consistently voted for hardware that’s thinner rather than upgradeable. But we have to draw a line in the sand somewhere. Our purchasing decisions are telling Apple that we’re happy to buy computers and watch them die on schedule. When we choose a short-lived laptop over a more robust model that’s a quarter of an inch thicker, what does that say about our values?

Actually, what does that say about our values?

First of all, "short-lived" is arguable, and I'd argue for "flat-out wrong". I don't take enough laptops through to the end of their life to be a representative sample, but I've purchased two PC laptops and two MacBook Pros. After two years of use, both PCs were essentially falling apart (hinges, power cords, and basically dead batteries) while the MacBooks were running strong.

My 2008 MacBook Pro did get a not-totally-necessary battery replacement after a year, but my 2010 has run strong for two years. I'd expect nothing less from the Airs or new MacBook Pro. So short-lived might be a relative characterization if anything, and only if you consider the need to pay Apple to replace your battery instead of doing it yourself a "death".

Second, and more important, this thought occurred to me: when we look at futuristic computing devices in movies such as Minority Report or Avatar, do we think "Neat, but those definitely don't look upgradeable. No thanks"?

Do we imagine that such progress is achieved through the kind of Luddite thinking that leads people to value "hackability" over never-before-achieved levels of precision and portability?

The quote above is the summation of Wiens' argument that "consumer voting" has pushed Apple down this road, and that we need to draw a line in the name of repairability, upgradability and hackability.

I'd argue that Apple's push toward devices that are more about the human interface and less about the components is a form of a categorical imperative, a rule for acting that has no conditions or qualifications — that there is no line, there is only an endless drive towards progress: more portable devices that get the job done with less thinking about the hardware.

That is what drives the descriptions Apple uses in its product announcements: "magical" and "revolutionary", not "hacking" and "upgrading".

(Link via Daring Fireball)

Tuesday, June 5, 2012

I'm writing this post from Chrome 19.

Yes, nineteen. Zero is another significant number: the number of times I've downloaded the new version of Chrome.

Jeff Atwood wrote about this phenomenon, and the eventual paradise of the infinite version a year ago, stating that the infinite version is a "not if — but when" eventuality.

Chrome is at one (awesome) end of the software upgrade continuum. I've rarely noticed an update to Chrome, and I've rarely checked the version. A couple of months ago I did, when a Facebook game I was working on suddenly started crashing consistently. The problem was tracked to a specific version of Chrome, and within hours, I heard that an update had been released. I checked my version again, and Chrome had already been updated with the fix.

This nearly version-free agility has allowed Chrome to innovate at a pace not matched by IE, Firefox, or even Safari. I swear the preferences pane has changed every time I open it (not necessarily a good thing).

At the other end you have the enterprise dinosaurs: the inventory, procurement, and accounting systems that are just impossible to get rid of. I'm thinking of one local furniture retailer that still uses point-of-sale and inventory systems with black/green DOS-looking screens on the sales floor.

Most other software industries fall somewhere in between, trying to innovate, update, or even just survive while still paying the backward-compatibility price for technical decisions made in years past.

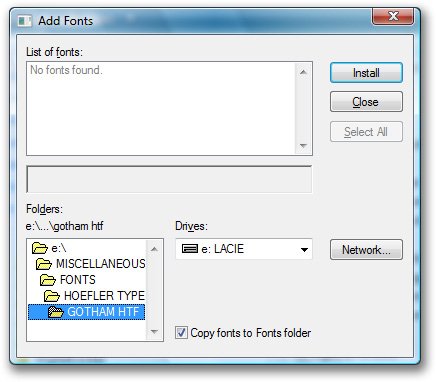

Check out this gem alive and well (erm, alive, at least) in Windows Vista:

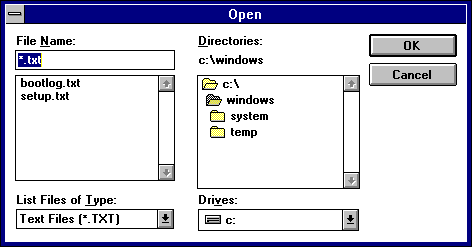

Look familiar? That file/directory widget has seen better days; it made its debut in 1992 with Windows 3.1:

Supporting legacy versions is not just a technical problem, though. For some companies and products it's just in their nature to be backward compatible. And sometimes to great success; take Windows in the PC growth generation, or standards like USB, for example. For some opinionated few, backward incompatibility is downright opposed to success.

And then there's Apple.

Apple may not be a shining example of smooth upgrades, but they aren't shy about forcing them.

Anyone who knows Apple knows they are just about the least sentimental bastards in the world. They'll throw away all their work on a platform that many were still excited about or discontinue an enormously popular product that was only 18 months old. They have switched underlying operating system kernels, chip architectures, their core operating system API, and notoriously and unceremoniously broken third-party applications on a wide scale with every new OS (both Mac and iOS).

OK, so they aren't entirely unsentimental — Steve Jobs did hold a funeral for Mac OS 9, about a year after OS X replaced it on desktops.

All of this allows Apple to put progress and innovation above all else.

Steve Jobs, in his famous "Thoughts on Flash", made clear his view that Apple doesn't let attachment to anything stand in the way of platform progress:

We know from painful experience that letting a third party layer of software come between the platform and the developer ultimately results in sub-standard apps and hinders the enhancement and progress of the platform. If developers grow dependent on third party development libraries and tools, they can only take advantage of platform enhancements if and when the third party chooses to adopt the new features. We cannot be at the mercy of a third party deciding if and when they will make our enhancements available to our developers.

Jobs also made it clear that this applies to Apple itself. "If you don't cannibalize yourself, someone else will," said Jobs in a quote pulled from the Walter Isaacson biography.

This attitude has paid off for iOS. Analysis of app downloads shows that uptake for new major versions is rapid. iOS 4.0 just barely got going before it was traded in for iOS 5.0.

The story is not the same for Android. MG Siegler reveals (via DF) that Android 4.0.x "Ice Cream Sandwich" has only seen 7.1% uptake in 7 months. As Siegler notes, Google is expected to announce the next version this month, which would likely occur before ICS even sees 10% adoption.
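For a rough sense of what those numbers imply, here is a back-of-the-envelope sketch. It assumes uptake keeps growing at the same average rate it has so far, which is my simplification and not something the data promises. The function names are made up for illustration.

```python
def monthly_rate(uptake_pct, months_elapsed):
    """Average uptake in percentage points per month since release."""
    return uptake_pct / months_elapsed


def months_to_reach(target_pct, uptake_pct, months_elapsed):
    """Months after release needed to hit target_pct.

    Assumes (hypothetically) that uptake stays linear at the average
    rate observed so far.
    """
    return target_pct / monthly_rate(uptake_pct, months_elapsed)


# Figures quoted above: 7.1% uptake, 7 months after release.
ICS_UPTAKE_PCT = 7.1
MONTHS_SINCE_RELEASE = 7

rate = monthly_rate(ICS_UPTAKE_PCT, MONTHS_SINCE_RELEASE)
total = months_to_reach(10.0, ICS_UPTAKE_PCT, MONTHS_SINCE_RELEASE)

print(f"Average uptake: {rate:.2f} points per month")
print(f"Months after release to reach 10%: {total:.1f}")
print(f"Months still to go: {total - MONTHS_SINCE_RELEASE:.1f}")
```

At roughly one percentage point per month, ICS would still be a few months short of 10% when its successor is announced, under that linear assumption.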

Two Googles

So at one end of the upgrade-nirvana spectrum, we have: Google. And at the other end: Google.

How could they be so different?

A lot of people want to draw tight comparisons between the mobile OS wars going on now and the desktop OS wars that Apple lost in the 80s and 90s.

If we do that, for a minute, we might actually see some similarities. Apple currently supports six iOS devices, with a total history of twelve. By some accounts, there may be as many as 3997 distinct Android devices.

Android is also "winning" in market share, with 51 percent of the US smartphone market vs. iOS's almost 31 percent, according to March 2012 Comscore data. Gartner numbers put Android ahead even further, with 56 and 23 percent, respectively (and this has been the story for some time).

Or is it?

Isn't all smartphone market share equal? What's interesting about these numbers is just how different they are from web usage numbers. Shockingly different.

This report by Forbes puts iOS web market share at 62%, with Android at 20%.

Similar data comes in when analyzing enterprise usage, as well.

Smartdumbphones

"Smartphone" market share numbers suggest Android is winning, yet certain usage statistics still put Apple in a comfortable lead. Meanwhile, Apple seems quite comfortable blowing away growth estimates again and again while nearly every other device maker is struggling to turn a profit. What does Apple know that the industry doesn't?

Has anyone else noticed a lack of "dumbphones" (non-smartphones) around them lately? Android phones have largely replaced these non-smartphones or feature phones in the "free" section of major cell phone providers' online stores and store shelves alike. This means customers who walk into a store looking for a new phone or a replacement but not knowing (or really caring) what they are shopping for are often ending up with an Android phone — just like they ended up with feature phones in years past.

That's great for market share. But how is it great for business? Generally speaking, these people don't buy apps. They don't promote or evangelize. They aren't repeat customers. Their primary business value is to the service provider, not to the device maker and not to Google.

And they are extremely unlikely to update their operating system.

The good news for Google is that these users who don't upgrade don't represent a large cost to abandon, should Google decide to make innovative, backward-compatibility-breaking changes in future versions.

The bad news is that third-party developers will be afraid to support new (and possibly risky) versions, or to break compatibility with old versions in order to adopt new features. All reasonable version distribution statistics, such as the new Ice Cream Sandwich numbers, show basically no adoption at all. So why take the risk?

I get the feeling that Apple will be happy to continue to innovate and bring new and better features to its customers, and Android will continue to match feature-for-feature on paper, but not where it counts: in the App Store.